Data

The RuneScape API provides price and category data, but no other attributes such as additional pricing or trading metrics. Using this API, we scraped a daily-updated time series of prices for all 4106 tradeable virtual items over 205 days, with a category attribute attached to each item.
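A minimal sketch of turning one item's scraped price history into a chronological series follows. It assumes each item's response is a JSON object whose "daily" field maps millisecond Unix timestamps (as strings) to prices. That shape and the function name `parse_daily_series` are illustrative assumptions, not a documented contract of the API.

```python
from datetime import datetime, timezone


def parse_daily_series(graph_json):
    """Turn one item's price history into (date, price) pairs, oldest first.

    ASSUMPTION: graph_json["daily"] maps millisecond Unix timestamps
    (as strings) to prices. Adjust this if your scraped data differs.
    """
    series = []
    for ts_ms, price in graph_json["daily"].items():
        # Convert milliseconds since the epoch to a UTC calendar date.
        day = datetime.fromtimestamp(int(ts_ms) / 1000, tz=timezone.utc).date()
        series.append((day, int(price)))
    # Do not rely on the JSON key order; sort explicitly by date.
    series.sort(key=lambda pair: pair[0])
    return series


# Hypothetical two-day history, deliberately out of order.
sample = {"daily": {"1700092800000": 105, "1700006400000": 100}}
for day, price in parse_daily_series(sample):
    print(day.isoformat(), price)
```

Repeating this for every item and storing each item's category alongside its series yields the items-by-days price table described above.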

Final Feature Set

After an iterative feature-set design process, we settled on a delta-based feature set. It contains summary variables built from adjusted daily changes: the percentage change between each pair of consecutive days across a 30-day window (29 changes in total), as follows:

% change from yesterday to today

% change from 2 days ago to yesterday

…

% change from 29 days ago to 28 days ago
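The list above can be computed with a short function. This is a sketch assuming plain percentage changes between consecutive daily prices. The text does not specify how the changes were adjusted, so that step is omitted, and `delta_features` is a hypothetical name.

```python
def delta_features(prices, window=30):
    """Return the window - 1 daily % changes (29 by default), newest first.

    prices: daily prices in chronological order (oldest first, today last).
      features[0]  = % change between yesterday and today
      features[1]  = % change between 2 days ago and yesterday
      ...
      features[28] = % change between 29 days ago and 28 days ago
    NOTE: plain % changes; the "adjustment" step is not reproduced here.
    """
    if len(prices) < window:
        raise ValueError(f"need at least {window} prices, got {len(prices)}")
    recent = prices[-window:]
    features = []
    # Walk backwards so that index 0 holds the most recent change.
    for i in range(window - 1, 0, -1):
        prev, curr = recent[i - 1], recent[i]
        features.append(100.0 * (curr - prev) / prev)
    return features


history = [100.0] * 28 + [100.0, 110.0]  # a 10% jump on the latest day
feats = delta_features(history)
print(len(feats), feats[0])
```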

Using this set, we predict both the direction of the next day's change (i.e., up, down, or same) and its exact percentage (e.g., -2%, 0%, +1%). This lets us test both regression and classification, and see how trading away prediction exactness (predicting only a direction, without a magnitude) affects accuracy. We used many learners, including decision trees, nearest neighbor, and multilayer perceptrons.
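Both prediction targets can come from the same sliding window over an item's history. In the sketch below, each sample pairs the 29 newest-first features with the next day's % change (the regression target) and its direction (the classification target). The `tolerance` threshold for labelling a move as "same" is our assumption (0 by default, so only an exactly unchanged price counts as "same"), and all function names are hypothetical.

```python
def pct_change(prev, curr):
    """Percentage change from prev to curr."""
    return 100.0 * (curr - prev) / prev


def direction(change, tolerance=0.0):
    """Label a % change as 'up', 'down', or 'same' (within +/- tolerance)."""
    if change > tolerance:
        return "up"
    if change < -tolerance:
        return "down"
    return "same"


def build_samples(prices, window=30, tolerance=0.0):
    """Slide a `window`-day window over one item's chronological prices.

    Returns (features, next_day_pct, next_day_direction) tuples, so the
    same features serve a regression target and a classification target.
    """
    samples = []
    # t is "today" for each sample; the target is the move from t to t + 1.
    for t in range(window - 1, len(prices) - 1):
        # window - 1 daily changes, newest first, matching the list above.
        features = [pct_change(prices[j - 1], prices[j])
                    for j in range(t, t - window + 1, -1)]
        target = pct_change(prices[t], prices[t + 1])
        samples.append((features, target, direction(target, tolerance)))
    return samples


prices = [100.0] * 30 + [102.0, 102.0, 101.0]
for features, pct, label in build_samples(prices):
    print(round(pct, 2), label)
```

The resulting features and targets could then be passed to any of the learners named above. For example, scikit-learn provides `DecisionTreeRegressor`/`DecisionTreeClassifier`, `KNeighborsRegressor`/`KNeighborsClassifier`, and `MLPRegressor`/`MLPClassifier`, though the text does not say which implementation was used.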

Enhancing Model-Generated Predictions

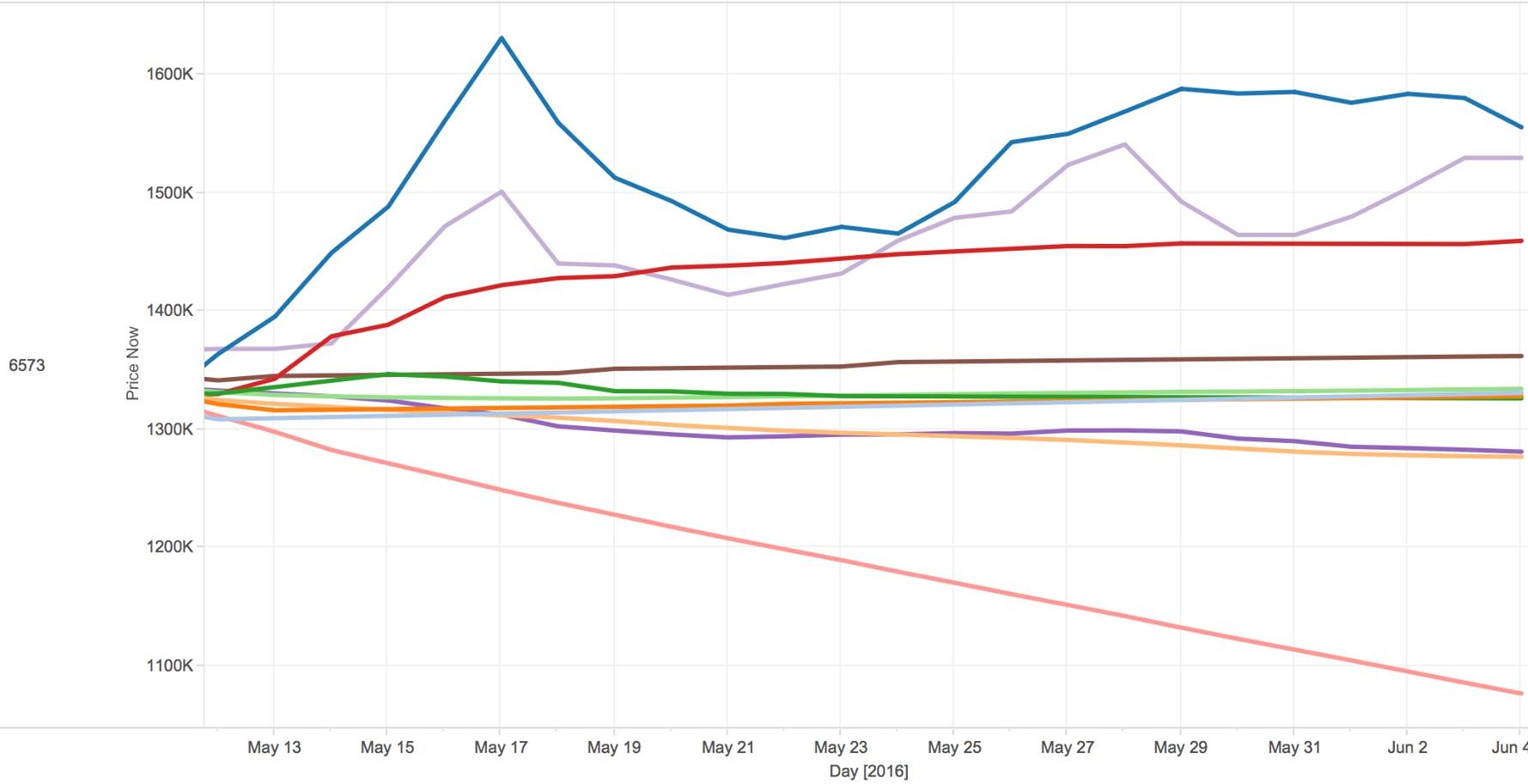

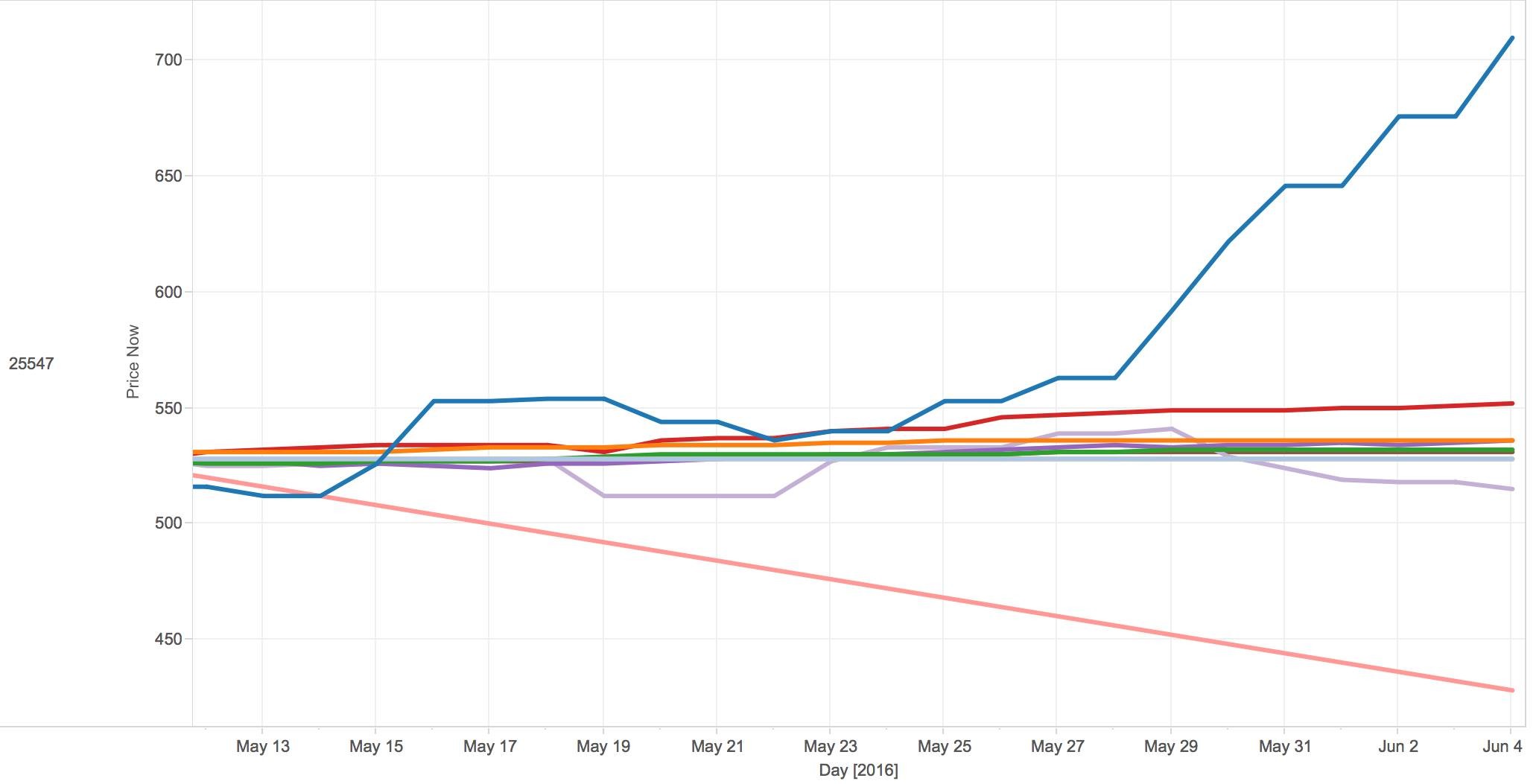

To test how well the models can predict farther into the future, we also built a long-term predictor that repeatedly fed the 1-day regressor's output back in as input, predicting prices up to 25 days ahead.
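This long-term predictor is a form of recursive multi-step forecasting. A minimal sketch follows, assuming a 1-day model `predict_pct` (hypothetical) that maps the 29 newest-first % changes to the next day's % change. Each prediction is converted to a price and appended to the 30-day history, and the oldest day is dropped before the next step.

```python
from typing import Callable, List


def forecast(prices: List[float],
             predict_pct: Callable[[List[float]], float],
             horizon: int = 25,
             window: int = 30) -> List[float]:
    """Recursively forecast `horizon` daily prices with a 1-day % change model.

    prices: observed daily prices, oldest first (at least `window` of them).
    predict_pct: any 1-day regressor mapping newest-first % changes to the
        next day's % change (a stand-in for the trained model).
    """
    if len(prices) < window:
        raise ValueError(f"need at least {window} prices, got {len(prices)}")
    history = list(prices[-window:])
    predicted = []
    for _ in range(horizon):
        # Rebuild the delta features from the current (partly predicted) window.
        features = [100.0 * (history[j] - history[j - 1]) / history[j - 1]
                    for j in range(window - 1, 0, -1)]
        pct = predict_pct(features)
        next_price = history[-1] * (1.0 + pct / 100.0)
        predicted.append(next_price)
        # Feed the prediction back in: drop the oldest day, append the new one.
        history = history[1:] + [next_price]
    return predicted


def momentum(features: List[float]) -> float:
    """Toy model: tomorrow repeats today's % change."""
    return features[0]


path = forecast([100.0] * 29 + [110.0], momentum, horizon=3)
print([round(p, 2) for p in path])
```

Because every step after the first consumes earlier predictions rather than observed prices, errors can compound as the horizon grows.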